A further update to "Reading and studying from the screen"

Elevator tale: Like the previous post, "An update to 'Reading and studying from the screen'", which updated my 2016 paper "Reading and studying on the screen", this post surveys various articles not previously considered. This time I consider ten articles released subsequent to mine and a further thirteen not previously included. The additional articles help to portray a much richer series of nuances to what it means to read on-screen and how comparison studies between on-screen and print reading might be furthered.

Earlier this year I posted an update to my 2016 article in the Journal of Open, Flexible and Distance Learning, "Reading and studying on the screen: An overview of literature towards good learning design practice". The article considered much, but not all, of the scholarship at the time related to how on-screen education might be advanced in the face of student preference for print. In the professional situations I have worked in over the last decade, the issue of whether distance education should be offered digitally or in print has been debated at various temperatures. Given the importance of the matter to digital education, I have purposefully kept an eye out for articles and studies concerned with on-screen reading (though, alas, Wolf's book is still not yet available in Kindle!).

As in my previous post I want to be clear. There are two distinctive issues at play here for me. I feel the need to once again clarify them:


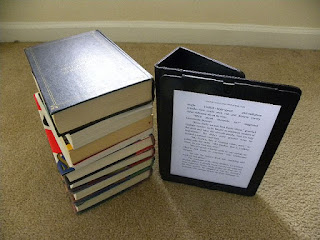

Image: Hobbies on a Budget, https://www.flickr.com/photos/62030038@N02/

- The decision to base design on a digital rather than print foundation is fundamental. Either a learning design is digitally-based, or it is print-based. If it is print-based, then a digital version might be made available; however, nothing is lost if that digital version is printed. If the design is digitally-based, not all elements might be printable but a significantly greater pedagogical choice becomes possible.

- The actual mix of digital and print resources used for the purposes of study can be a sliding scale. Literature supports the notion of a digitally-based design, with print options available where lengthy reading is required; print options might be reserved for articles, books or book chapters that are narrative (that is, that do not attempt to also serve as learning guides and therefore make the entire design essentially a print-based one).

To be still clearer, I am neither saying that print has no part to play in education nor that everything ought to be digital. I am instead advocating for a digitally-based learning design such that print does not become the limiting factor.

Since the previous post I've read a further ten articles subsequent to my own and thirteen not previously considered (none of these are mentioned in the previous update post, either). As in the previous update post I've included an annotated bibliography of the twenty-three articles below. Here are the additional points gleaned from them:

- There are always multiple variables in play. It's too simple to compare 'on-screen' with print, as there is no single form of on-screen reading. Any study must carefully define its place across these (and likely additional) factors:

  - There are multiple types of on-screen text. There are differences across e-text in the form of questions, briefings, short articles, full-length articles and books (and others).

  - There are various motivations for reading. Reading aloud to a child is different to reading on a commute, or studying a broad range of scholarly books for research. Why a text would be read is important and may make one or other (on-screen or print) more appropriate.

  - On-screen formats are multi-faceted. It might be an HTML page with or without hyperlinks and embedded multimedia; a free-text version of a physical book; a PDF (fixed) version of a physical book; a scanned page. Each has different possibilities related to page metaphor, user control over size and type of font, distraction (or enhancement), text search and selection, and text advance/review.

  - Various interfaces exist for on-screen text. There are, for example, differences in reading from a Kindle app, a web browser and a proprietary e-textbook interface. The extent to which these provide spatial feedback, synchronisation and highlight/annotation options can differ markedly.

  - Various devices exist for reading on-screen text. As above, there are differences in reading e-text from a laptop screen, desktop monitor, Kindle Fire, iPad and smartphone.

- It is clear that scaffolding on-screen reading can make a positive difference to student engagement. This might even extend to the choice of font, whereby choosing disfluent (more difficult to read) fonts may slow on-screen readers down and improve their understanding (a tentative conclusion from Sidi et al, 2016; see also https://www.wired.com/2011/01/the-benefit-of-ugly-fonts/). Briefing students and defining the parameters under which they ought to read also assists (Sidi et al, 2017), as suggested in my original article.

- Studies (particularly outliers) need to be carefully scrutinised. For example, Kong et al (2018) include an outlier in their analysis (Kim & Kim, 2013) which skews their conclusion. The Kim & Kim (2013) study findings are highly unusual, and there is evidence in their paper that students reading on-screen were required to answer comprehension questions by circling the correct answer with a mouse(!) This likely explains the higher reading times and adds a further layer of cognitive burden to the task. In another study, Lenhard et al (2017), students answering questions on screen were not able to go back to correct any mistakes they might have made by accidentally choosing an incorrect response.

- There is clearly room for much more research, albeit nuanced along the lines suggested by Mangen & van der Weel (2016) and with reference to the types of reading listed in Mizrachi et al (2018). Testing different forms of reading and applying the different variables listed above will help to advance the literature and further nuance the overall topic. Focus should be on, as Mizrachi et al (2018, p.3) suggest, "learning from text" (see also 'Gear 2' mentioned in Sackstein et al, 2015). Study design is also important; note Sackstein et al's (2015) decision to let students read in a classroom and not a laboratory. Future studies should also be concerned with the conditions in which students read.

Image: John Jones, https://www.flickr.com/photos/160462157@N08/

- Reading on-screen appears to be a very important academic skill. That almost half (49%) of doctoral students and over one third (35%) of post-graduate students were found to prefer digital/on-screen in Mizrachi (2015) signals the role of on-screen literacy in education. In the article I wrote that "Effective on-screen reading skills are important for 21st century professionals" (2016, p.34). This is indeed the case for academic development, too. Students who are confident and accomplished on-screen readers will have developed an essential professional skill that will serve them well across their studies and beyond.

- The Porion et al study (2016) gives strong evidence that the issue is not so much on-screen versus paper, but rather seeking to create conditions in which they become at least equivalent. My initial paper provides important clues as to how this 'at least equivalence' might be achieved. From the evidence I've reviewed, academic reading is best without hyperlinks (reducing cognitive overload); with every application closed except that required for the reading task (removing distractions); with a simple, clean reading interface; and on a tablet device or generous, well-lit monitor. Under these conditions it is highly likely that there would be no significant difference between reading the material on-screen and reading it in print. My original paper pp.39-40 gives further guidance.

- The comment on p.35 of the initial paper (footnote) related to the proportion of students preferring on-screen resources is now substantiated in one published sample. Mizrachi (2015) found that, of her respondents, "about 18% agreed or strongly agreed with a preference for reading electronically" (2015, p.305). This nicely matches the "up to 20%" figure I included in the paper. The same study by Mizrachi further found that an electronic version of a reading of less than five pages would be preferred by 47.7% of respondents.

- Some 80-90% of the undergraduates in the study use laptops for on-screen reading, with only about 28% using tablets (Mizrachi et al, 2018). I find this concerning. As outlined in the factors section above, there are various devices students might choose; laptops are far from optimal. I wonder whether reading from a laptop is part of the problem students have with on-screen reading? Laptops are not portable reading devices. Tablets are far better, and on-screen readers now have extremely flexible options (see my own setup below). The Mizrachi (2018) and Mizrachi et al (2018) studies have me wondering. Is it time to purposefully explore how those reading on-screen actually behave? I think it is time we considered not only which devices on-screen readers are using but also how, when and why they use them.

My interest in the question mentioned above stems from my own experience. Acknowledging I'm likely an outlier, I use at least two devices in my on-screen reading. The first and primary device, a Microsoft Surface 3, serves a triple function as a desktop, laptop and tablet. In the photo you can see how I've optimised my on-screen study format; three monitors, one portrait (I find it easiest to read extended works as I study using that screen). When reading on-screen I can add notes in OneNote (main landscape monitor) and include anything I might need to reference in the lower right (Surface) screen.

When I want to take the tablet to the sofa to read, I just unplug the Surface from its magnetic dock and do so; I can also throw on the magnetic keyboard (bottom left of the picture) if I also want to take notes as I read, though the stylus enables me to quickly highlight text without the keyboard. I also have a Kindle Fire, which is fantastic for reading (and annotating) Kindle eBooks. It synchronises perfectly with my Surface and is fully portable. Access to these devices facilitates multiple on-screen reading possibilities, adding to flexibility and increasing my ability to read much more effectively on-screen. It's a reasonably costly setup, but it really works!

I now read almost exclusively on-screen. I have workflows for on-screen readings and books, and I make use of multiple monitors to improve my ability to read, highlight, take notes (including extended quotations) and draft writing. I buy books now on Amazon, where I make the most of the fact that my Kindle Fire synchronises with my PC (including all notes). I read and annotate PDF articles in Mendeley, which assists me in keeping track of articles and locating new ones. Mendeley is very simple to use and features PDF annotation tools that work extremely well. I close my email client when I work. I purposefully keep my internet browser closed as well. The only other applications open are Spotify, which provides me with a gentle set of background classical music, and OneNote for taking notes and preparing drafts.

Personally I prefer the convenience, cost-effectiveness, portability and manipulability of on-screen text.

I was very interested in the Zhang & Kudva finding that combining e-books and print books is reflective of high reading volumes. At the close of 2018 I was surprised to find that I have already read over 43 books this year (excluding bible and devotional reading; admittedly, 'discovering' Terry Pratchett helped boost my reading). While not all of the reading has been enlightening, I can comfortably say that I am a confident print and on-screen reader. I'm proud to say that not one scrap of paper was consumed in the preparation of this review!

Here are my article notes in support of this post.

Chen, D. W., & Catrambone, R. (2015). Paper vs. screen: Effects on reading comprehension, metacognition, and reader behavior. In Proceedings of the Human Factors and Ergonomics Society (Vol. 2015–January). https://doi.org/10.1177/1541931215591069

- Very good introduction section

- Metacognition comparison results are not provided (lack of space cited)

- A study of 92 participants (students aged 18+) randomly assigned across two independent variables: study medium (text on paper, or a screen) and in-text prompts (metacognitive or non-metacognitive)

- Three text sections of human interest made up of around 1,000 words each were used. Print participants took more notes, but there was no significant difference in comprehension across print and on-screen groups

- The on-screen treatment used "a standard computer monitor" (not specified, likely LCD)

- Two questions were included at the end of each passage, one short-answer and the other multiple-choice. Participants were told they had unlimited time and their actions were highly structured

- The authors note that their "results are in line… with recent trends in the 'paper vs. screen' literature, which demonstrate that differences in how people read on screen and on paper are diminishing as technology becomes more commonplace" (2015, p.334). Technology familiarity is likely one key reason

- There was no significant difference between the on-screen and print groups related to their predicted performance (neither group was impressive at it!)

- The authors identify a preference for printed text from across their sample

- The authors conclude that their study "did suggest that screen participants performed more efficiently by achieving comparable comprehension and POP accuracy while doing less work than paper participants" (2015, p.336)

- This study is an excellent introduction to the literature. Its conclusions match those of my synthesis article. The authors speculate that younger readers are likely to be more confident reading from the screen, even though their preference is print; it is particularly of interest that the on-screen readers performed as well as the print ones, even though the print readers took more time (and more notes). It is disappointing that the metacognitive results were not included (I have not been able to track them down, so they may be unpublished).

Clinton, V. (2018). Savings without sacrifice: a case report on open-source textbook adoption. Open Learning: The Journal of Open, Distance and e-Learning, 1–13. https://doi.org/10.1080/02680513.2018.1486184

- The COUP (Costs, Outcomes, Use, Perceptions) framework was used to establish the experiences of undergraduate psychology students (N=520) using either a commercial or an open source textbook. Students using the open source text tended to use an electronic version

- Open text costs were lower, and there were no outcome, use or performance differences across the groups

- Other conditions were fixed; the only change across course performance from one course to the next was the change of textbook. This is a comparative study of the same course across two student groups, one after the other (commercial textbook n=316, open source [OS] textbook n=204). Student groups were broadly similar, but the OS group had more females, fewer withdrawals, and much higher (91%) electronic textbook access. Students in the OS group also had a higher overall grade point average, though taking this into account "performance appeared to be approximately equivalent" (2018, p.185)

- Very solid survey participation (86% of students in commercial, 91% in open source textbook groups). Significant differences across cost (OS much cheaper), visual appeal (in favour of commercial) and written style (in favour of OS)

- Students enjoyed "the writing, electronic access, and electronic features… [of] the open-source textbook" (2018, p.184), though there was additional comment made about "a dislike of reading from screens" (ibid.)

- The study concludes that "the findings from this case report indicate that the open-source textbook reduced the financial burden of a college education without negatively influencing student learning, student perceptions of quality, or use of the textbook" (2018, p.186)

- This study is a valuable one primarily because of its methodology (comparison study), also for its counter-intuitive findings. Open source texts - used predominantly online - have increased student retention and reduced costs to students, without hindering their performance!

Dobler, E. (2015). E-textbooks: A personalized learning experience or a digital distraction? Journal of Adolescent and Adult Literacy, 58(6). https://doi.org/10.1002/jaal.391

- Discusses the additional multi-media potential for e-textbooks, and their cross-device appeal

- Also overviews the different options for e-textbooks in terms of whether they provide links, multimedia, ability to take notes and highlight, social networking possibilities and access to a built-in dictionary

- The study seeks to answer three questions (2015, p.483):

  - What are the e-textbook preferences of undergraduate teacher education students?

  - What are the perceptions of undergraduate teacher education students toward reading an e-textbook?

  - How do undergraduate teacher education students view the role of an e-textbook in their learning process?

- The literature review highlights the "mixed response" (2015, p.484) to questions of how well e-textbooks influence student comprehension, and that advancing technology calls into question the relevance of earlier studies

- The decision of an e-textbook or printed one need not be binary

- Sample consisted of 56 preservice teachers (8 male, 48 female) in a course associated with literacy; the course made use of an e-enhanced e-textbook. Half of the sample had already had experience with an e-textbook

- Pre- and post-questionnaires and a focus group were used to gather data

- The teacher gave an overview of how to engage with the e-textbook in an early class session

- Findings:

  - 22% of respondents preferred an e-textbook at the beginning of the class and 50% preferred it at the end; print preference moved from 58% to 42%

  - Digital note sharing was popular, though some students reported "eyestrain with screen reading", "too many distractions when reading online" and "connection to physical movement of page turning" as barriers (2015, p.488)

  - 25% of respondents thought that the e-textbook had a negative influence on their cognitive engagement (64.5% a positive one); various elements of the e-textbook actively encouraged engagement

  - One interesting quote: "Sometimes I forgot I was reading a textbook. I had to train my brain to think critically when reading because usually when I'm on a device it's for recreation." (2015, p.488)

- Reading self-regulation was an important factor

- The article suggests that teachers have an important role to play in supporting their students' adoption of and effective use of e-textbooks: "Teachers should not assume that because many students appear to be adept at using digital devices, along with texting and online social networking tools, that they are adept at comprehending electronic texts" (2015, p.489), and "Teachers and students should expect the shift from print text to e-text to take time, instruction, and practice" (2015, p.490).

- The article is further evidence that e-textbooks are becoming more viable as at-least-as-good-as print ones. Many students, upon trying an e-textbook, became more convinced of their suitability. The student quote cited above from p.488 is insightful, and provides part-evidence for the importance of orientating students to the effective use of e-textbooks. Orientation is the first of my own recommendations: "Orientate students to the potential dynamics of on-screen reading, making them more deliberate and focused about their reading behaviour" (Nichols 2016, p.39).

Gerhart, N., Peak, D., & Prybutok, V. R. (2017). Encouraging E-Textbook Adoption: Merging Two Models. Decision Sciences Journal of Innovative Education, 15(2). https://doi.org/10.1111/dsji.12126

- The article aims to "promote understanding of e-textbook adoption, to support their beneficial use, and to seek new insights into student e-textbook adoption behavior" (2017, p.193)

- Findings identify "the most important factors for encouraging e-textbook adoption: perceived usefulness, habit, and hedonic motivation" (2017, p.212), the latter meaning "relative pleasure of using e-textbooks as compared to traditional textbooks" (2017, pp.196-198).

- The article suggests that price is not an important factor for e-textbook adoption and suggests that the three identified factors should be emphasised in the adoption of an e-textbook.

Gueval, J., Tarnow, K., & Kumm, S. (2015). Implementing e-books: Faculty and student experiences. Teaching and Learning in Nursing, 10(4). https://doi.org/10.1016/j.teln.2015.06.003

- A curriculum revision in a nursing programme took a direction for which no traditional textbooks were available, which required the use of various e-books

- In their review of literature the authors note that "Increased use of e-books improves satisfaction with using them" (2015, p.181)

- Research questions (2015, p.182):

  - Does student and faculty satisfaction with e-books increase with increased use over time?

  - Do students and faculty report more productive learning with increased use of e-books over time?

  - Is student and faculty initial self-classification of ability to use e-books associated with satisfaction and ease of learning with e-books?

- Email questionnaires and focus groups were used for data collection; "The convenience sample consisted of all incoming junior baccalaureate nursing students and the faculty assigned to teach this cohort in their first semester of nursing" (2015, p.182), made up of 69, 36 and 19 respondents respectively to the first, second and third surveys (only 13 completed all three); average student age was 23.2 years, 97% female

- Declining participation may have been the result of students not recalling their unique identifiers, and proximity of surveys to assessment times

- Findings are not well reported and appear internally contradictory, though students did perceive their proficiency at using e-books increased over time (however note the declining survey participation rate); not enough data was evident to address the third research question

- Barriers and benefits to adoption are consistent with other findings

- The study is transparent in stating that "Limitations of this study include the small sample size and the limited diversity of sample populations. The data were self-reported, making it less reliable" (2015, p.185)

- This study highlights the importance of student orientation, otherwise adds little to the overall literature. It is appropriately included in a nursing educators' journal; the limitations cited above are worth noting for any seeking to research in this area.

Kalyuga, S., & Liu, T. C. (2015). Guest Editorial: Managing cognitive load in technology-based learning environments. Educational Technology and Society, 18(4). https://doi.org/10.2307/jeductechsoci.18.4.1

- The editorial overviews a special issue of the ETS journal concerned with cognitive load theory in technology-based learning environments

- Cognitive load theory is concerned with the relationship of limited working memory to "effectively unlimited long-term memory storage schemas" (2015, p.1)

- Cognitive load is "defined as working memory resources required for completing a learning task or activity by learners with a specific level of prior knowledge… the magnitude of cognitive load is determined by the degree of element interactivity – interconnectedness between the related elements of information that need to be processed simultaneously in working memory" (2015, p.2)

- There are two types of cognitive load: intrinsic and extraneous. Intrinsic is "the load that is relevant to achieving the intended learning goals", whereas extraneous is "irrelevant to learning and is present only because instruction is designed in a way that requires learners to engage in cognitive processes and activities that are not actually required for acquisition of intended schemas" (2015, p.2). A further element is the germane working memory resources allocated to the intrinsic learning task

- Worked examples decrease cognitive load; redundant information increases it

- The editorial is a useful primer in cognitive load as it relates to the use of technology, and so provides some effective background reading for those not familiar with the concept.

Kim, H. J., & Kim, J. (2013). Reading from an LCD monitor versus paper: Teenagers' reading performance. International Journal of Research Studies in Educational Technology. https://doi.org/10.5861/ijrset.2012.170

- Tests reading performance across print and scrolling on LCD screen

- Sample of 108 high school students; significantly higher comprehension from print (average scores 76 and 61 respectively)

- On-screen also took longer; 16 minutes compared with 10 for print

- Some very early citations, one citation out of chronological order, but NSD (no significant difference) findings acknowledged

- Activity took place in class time

- Treatment involved scanned facsimiles of two tests; students had to answer ten multiple choice questions "by circling the correct multiple-choice answer, whether it was with a pencil or with the mouse" (2013, p.18), indicating a difficult interface and poor choice of electronic representation [my note]; reading time and answering time not distinguished in results

- Interestingly, "Paper-preferred teenagers received average scores of 61.59 and 79.30 on the electronic and paper tests respectively while LCD-preferred teenagers received average scores of 54.65 and 56.24 respectively on the two tests" (2013, p.21). There appears something about the LCD vs print preference that has widely influenced results. Also, the findings run counter to the more general finding that "the majority of students indicated they would prefer to test on computer" (2013, p.21)

- This paper is likely hiding various methodological flaws, or else is unhelpfully unclear; frustrating, as its findings are clear outliers. Even where findings are in favour of print the margin is never this stark. The authors themselves note that "a 36% slower reading speed on LCD monitors is even worse than the findings of the previous studies that reported a 15-30% slower reading speed when reading from a computer screen relative to paper" (2013, p.21). Requiring students to circle the correct answer in an electronic document using a mouse(!) may be one explanation. The difference in average score across the two groups of 15 points in a multiple-choice test requires more explanation than is offered. Until these questions are addressed, it is likely that these outlier findings may be best ignored.

Kong, Y., Seo, Y. S., & Zhai, L. (2018). Comparison of reading performance on screen and on paper: A meta-analysis. Computers and Education, 123. https://doi.org/10.1016/j.compedu.2018.05.005

- The study is a meta-analysis (and synthesis) of 17 studies since year 2000 concerned with comparing reading comprehension and speed when reading on-screen and in print (the 17 selected from an initial list of 416)

- The study uses robust variance estimation (RVE) meta-analysis models to determine effect size across the studies

- The study finds "reading on paper was better than reading on screen in terms of reading comprehension" (2018, p.138); however, it also concludes that after the year 2013 "the magnitude of the difference in reading comprehension between paper and screen followed a diminishing trajectory" (ibid)

- A very good review of literature

- The studies came from varying countries, sample demographics and electronic device used; "Total sample size was 4831 for reading comprehension and 1359 for reading speed" (2018, p.142)

- The study checked against publication bias, whereby findings with "non-significant findings, small effects, or small sample size" are less likely to be published

- The meta-study concluded that "readers had significantly higher comprehension scores when reading on paper than reading on screen" (2018, p.143) and there were "no differences in terms of reading speed between reading on screen and reading on paper" (2018, p.145)

- The authors suggest that familiarity with print and cognitive load might explain the differences in cognitive performance; however, they also note that in studies post 2013 "the magnitude of the difference in reading comprehension between paper and screen follows a diminishing trajectory" (2018, p.147)

- The authors suggest that font, layout, psychological and broader demographic factors should be of interest to future researchers

- Half of the studies drawn on in this article are referenced in my original paper. At first glance the findings look decisive, though one outlier - the 2013 Kim & Kim study - stands out and is acknowledged by the authors to be an outlier. The Kim & Kim article (available from https://www.learntechlib.org/d/49786) was not included in my initial article, and on reviewing it, the conditions of the experiment being incentivised and conducted in class time may have skewed results. Excluding it from analysis would likely have changed the overall outcome of significance in favour of cognitive outcomes from print. Despite this, the conclusions of my first article still stand.

Lenhard, W., Schroeders, U., & Lenhard, A. (2017). Equivalence of Screen Versus Print Reading Comprehension Depends on Task Complexity and Proficiency. Discourse Processes, 54(5–6). https://doi.org/10.1080/0163853X.2017.1319653

- Tests children from grades 1 to 6 on test conditions on-screen and in print, under time constraints

- Generally children doing the test on-screen "worked faster but at the expense of accuracy" (2017, p.427)

- Cites a 2008 synthesis paper as finding "no statistically significant administration mode effect of PP versus CB tests on kindergarten through 12th grade students’ reading achievement scores" (2017, pp.428-429)

- Notes that findings of NSD may be subject to factors related to learners, the hardware used, and the treatment interface

- Students in the CB (computer-based) treatment did not have opportunity to correct answers mistakenly entered

- Sample was 5073 students from 71 schools and 351 classes; to get a representative sample across sociodemographic averages the final sample was 1520 in print-based and 1287 in computer-based treatments

- Each student was given only one of the two tests

- In the computer-based treatment there was no scrolling; 75 items had to be answered in three minutes

- The authors suggest their findings are in part explained by the fact that "usage of the mouse, in addition to influencing the raw scores, can contribute to higher error rates in the CB condition. Clicking the mouse only needs a tiny finger movement, whereas marking the answer with a pencil requires movement of the whole hand or even arm" (2017, p.439), adding that "the missing possibility to revise answers in the CB condition seems to be most important [factor]" (ibid)

- Another frustrating comparison. The authors conclude that "equivalence across different test modes of a particular psychometric instrument cannot be assumed without careful scrutiny of the test data" (2017, p.440), which surely also applies to their own findings. Students in the computer-based treatment not being able to correct any mistakes they have made seems a very obvious problem with the study. There is no indication as to whether or not students doing the print-based tests were able to correct their answers.

Mangen, A., & van der Weel, A. (2016). The evolution of reading in the age of digitisation: an integrative framework for reading research. Literacy, 50(3). https://doi.org/10.1111/lit.12086

- The authors suggest a need for "an integrative, transdisciplinary model of embodied, textual reading accounting for its psychological, ergonomic, technological, social, cultural and evolutionary aspects" (2016, p.116)

- Reading, it is claimed, is displaced when digitised to become represented by "an increasing complexity of multimodal, dynamic, and interactive representations" (ibid), which in turn "invites closer scrutiny of associations between ergonomics (sensorimotor, haptic/tactile feedback), attention, perception, cognitive and emotional processing at different levels, as well as subjective experiential dimensions of reading different kinds of texts for different purposes" (2016, p.117)

- The authors suggest a link between introducing children to on-screen reading and changes to reading habits; it is unclear "what the longer-term sociocultural and cognitive effects of the changes in reading practice might be" (2016, p.118)

- The authors highlight that on-screen and print reading have different affordances, and are concerned that these may in turn influence what it means 'to read'

- The authors propose a framework of reading along the following main dimensions: ergonomic; attentional/perceptual; cognitive; emotional; phenomenological; sociocultural; and cultural-evolutionary

- The authors propose a series of frameworks across which the dimensions might be tested (reproduced here in full):

- Substrate: paper vs screen-based reading devices (e.g. e-readers, tablets, computer screens and smart phones), audio–visual features and haptic/tactile feedback;

- Interface characteristics (e.g. one or two-page display, page turning, thickness, weight and bendable/flexible screens);

- Text: length, type of text (e.g. genre and complexity: narrative, expository), layout and structuring;

- Levels of comprehension: from surface (word and sentence) to deep inferential comprehension;

- Time of recall: short-term vs long-term memory;

- Readers: age, socio-cultural background, gender, expert level (e.g. students, children vs adults, women vs men, beginning vs advanced and ‘digital native’ vs ‘digital immigrant’);

- Motivation and purpose of reading (e.g. study, leisure, contemplation, light entertainment and news) (p.121).

- This is an important paper for framing the context of the on-screen versus print debate. It correctly expands what seems on the surface to be a fairly simple comparison into its component variability. Taking various of these settings for granted may skew the overall results of a general study. Some of these might be the basis for experimental testing in their own right.

Mizrachi, D. (2015). Undergraduates’ Academic Reading Format Preferences and Behaviors. Journal of Academic Librarianship, 41(3). https://doi.org/10.1016/j.acalib.2015.03.009

- An investigation into students' preferences of on-screen or print

- Sample is 400 students at the University of California, 8% of population; mean age of 20.55

- No real surprises in outcome - an overwhelming preference for print, though factors such as "accessibility, cost, complexity and importance of the reading to the course affect their actual behaviors" (2015, p.301)

- The study cites research indicating that preference for print declines by qualification. Preference for electronic formats is at its highest for post-doctoral researchers (49%), then graduate students (35%), down to undergraduates

- The authors note that print was preferred for reasons that "included eyestrain from reading on the screen and too many online distractions, but most frequently they stated that they learn the material better when reading in print" (p.303)

- Device choice: "nearly 90% of the undergraduates in this study (n = 350) use laptops for reading their electronic course readings, by far the most common device. Responses for phones and iPads/tablets are nearly identical: almost 28% (n = 109) and 26.4% (n = 103) respectively"

- Respondents listed distractions as an issue with on-screen reading

- Of the participants, "about 18% agreed or strongly agreed with a preference for reading electronically" (2015, p.305) though if a reading is less than five pages 47.7% agreed or strongly agreed that they would prefer an electronic version

- Most respondents (68%) disagreed that they preferred electronic textbooks

- In the discussion, the authors claim that students prefer print for applying deep-learning strategies

- This is a highly citable paper that gives nuanced insight into students' overall preference for print (which is not in itself a new finding). Details as to the volume of students preferring on-screen resources, and the higher preferences for on-screen for readings of five pages or less and increasing with qualification level are important additions to the picture. It could be said that overall more students prefer print, but some student groups and different types of reading swing the preference more toward on-screen. The paper is interesting in that the term 'deep' is not directly investigated, yet the authors conclude that students prefer print for applying deep learning strategies. As an additional note, that 90% of undergraduates use laptops(!) for their on-screen reading goes a long way to explaining why it may not be so popular! Tablets are much easier to position for reading and are more portable.

Mizrachi, D., Salaz, A. M., Kurbanoglu, S., & Boustany, J. (2018). Academic reading format preferences and behaviors among university students worldwide: A comparative survey analysis. PLoS ONE. https://doi.org/10.1371/journal.pone.0197444

- The study is a major, international one of the academic reading preferences of students (n=10293)

- The major finding is that "the broad majority of students worldwide prefer to read academic course materials in print" (2018, p.1)

- As with most studies, one of the reasons print is preferred is that students self-report learning better from print

- The authors note that studies "over the last decade suggest that the presentation format of a text, either print or electronic, affects deep learning strategies, retention, and focus capabilities" (2018, p.3)

- The study makes use of the ARFIS (academic reading format international study) instrument

- The student sample is drawn from first-year through to doctoral level, and is based on self-reported preferences and behaviours

- Laptops (80.9%), phones (36.83%) and desktops (30.54%) are the most popular devices

- Overall, worldwide and across all age groups, students prefer print; some 69% of respondents would print electronic material

- Students are more likely to highlight and annotate printed material (83.6%, compared with 24.11% for on-screen)

- "Respondents of a higher academic rank show slightly lower rates of print preference" (2018, p.23), which the authors explain in terms of an accumulation of experience with on-screen reading and the nature of their reading tasks

- On-screen reading is "more acceptable for shorter texts than longer ones" (2018, pp.27-28)

- The authors suggest that "Instructional designers could work towards helping students acquire the prerequisite knowledge to leverage digital texts through more explicit instruction on the navigation of e-formats" (2018, p.28)

- Again, a citable study. Device choice is interesting here; laptops, phones and desktops are not the optimal choices for on-screen reading! Interesting that the increased preference for on-screen for those 'of higher academic rank' is explained in terms of reading maturity and the nature of the research being done. It's also possible that students at this level simply don't have much choice and are learning to prefer the format they are generally required to work with. Having to locate and engage with journal articles, specialist books and electronic library searches lends itself to using bibliographic software for complex bibliographies. It’s relatively easy to slip into on-screen reading under these conditions, particularly as the laptop is a major means of writing research theses.

Myrberg, C., & Wiberg, N. (2015). Screen vs. paper: what is the difference for reading and learning? Insights: the UKSG Journal, 28(2). https://doi.org/10.1629/uksg.236

- Considers preferences for e-textbooks across students; the authors suggest interface is the critical element of e-textbook uptake

- From their review of literature the authors suggest that "the problem with screen reading is more psychological than technological… [but] medium preferences matter, since those who studied on their preferred medium showed both less overconfidence and got better test scores" (2015, p.51, citing Ackerman & Lauterman)

- The authors cite a study by Stoop et al (2013) evidencing that "enhancing the electronic text instead of just turning it into a copy of the printed version seems to have helped the students to score higher on [a] test" (2015, p.52)

- The authors list some of the barriers to the interfaces used by e-textbooks: "the apps for e-reading lack the ability to present essential spatial landmarks, they give poor feedback on your progress as you read, and make it difficult for you to plan your reading since they do not show how much is left of the chapter/book in a direct and transparent way. Other drawbacks are that usually, the reading applications do not sync between devices and it is not always possible to adjust the text to the screen" (2015, p.53)

- This brief synthesis piece rightly focusses on the importance of the actual user interface of on-screen text. The challenge is to provide spatial landmarks without clutter (which increases cognitive load). The point about synchronisation is very well made.

Park, E., Sung, J., & Cho, K. (2015). Reading experiences influencing the acceptance of e-book devices. Electronic Library, 33(1). https://doi.org/10.1108/EL-05-2012-0045

- Evaluates respondents' perceptions of e-book devices (219 respondents from "a large private university", 2015, p.124; mean age of 22.8 years)

- Key determinants of acceptance are: "viewing experience, perceived mobility, perceived behavioral control, skill and readability. Also perceived usefulness and text satisfaction…" (2015, p.120)

- iPads, Samsung Galaxy Tabs and Interpark Biscuits were the devices used by participants; all were used for ten minutes by all participants in random order

- Participants had positive perspectives toward e-books

- Not much is added by this article, though the focus on e-reader devices is novel.

Porion, A., Aparicio, X., Megalakaki, O., Robert, A., & Baccino, T. (2016). The impact of paper-based versus computerized presentation on text comprehension and memorization. Computers in Human Behavior, 54. https://doi.org/10.1016/j.chb.2015.08.002

- Comprehension and memorisation, on-screen versus print, for students in their third or fourth year of secondary school (n=72, mean age of 13.9 years)

- No significant difference, with the caveat "if we fulfil all the conditions of paper-based versus computerized presentation (text structure, presentation on a single page, screen size, several types of questions measuring comprehension and memory performances), reading performances are not significantly different" (2016, p.569)

- The treatment removed any necessity for scrolling (a large screen was used; only a single page was necessary), and text was optimally structured (following a hierarchical structure)

- The authors note the divergent findings and conclusions from the literature on the subject

- The treatment consisted of about 1000 words

- The authors note that "the task (reading to understand) had a greater impact on performance than the presentation medium" (2016, p.574)

- This study serves as a good baseline. Ceteris paribus, on a single page there is no difference across the two media. The small number of words, the large screen and the lack of pagination seem ideal conditions for a firm NSD. The authors' claim that "the task (reading to understand) had a greater impact on performance than the presentation medium" (2016, p.574) is not necessarily substantiated by the actual findings.

Rouet, J. F. (2009). Managing cognitive load during document-based learning. Learning and Instruction. https://doi.org/10.1016/j.learninstruc.2009.02.007

- The article is an editorial to a special issue on the subject of cognitive load

- The model described proposes three contributors to cognitive load in learning: the student's understanding of the task; the tools and information sources available in the environment; and the capabilities of the individual learner

- The author notes that "richer, more sophisticated environments are not necessarily more learning-effective" (2009, p.447)

- The author clearly states that "Instructions and practice play an extremely important part in shaping the learner’s studying strategies" (2009, p.448) and concludes that "researchers interested in studying the effects of instructional design parameters on cognitive load have to make a number of decisions regarding the task setting: how to prepare students to the core learning activity (training), what directions to provide, how much time to allow, what adjunct sources of information to include, and so forth" (2009, p.449)

- The editorial introduces a special issue on the subject of cognitive load as it relates to document-based learning. The comments correlate to the recommendations in my original article.

Sackstein, S., Spark, L., & Jenkins, A. (2015). Are e-books effective tools for learning? Reading speed and comprehension: iPad® vs. paper. South African Journal of Education, 35(4). https://doi.org/10.15700/saje.v35n4a1202

- Study compares reading from an iPad with reading from print, in an academic context; sample was 71 students (55 grade 10, 16 university students); all students were exposed to both iPad and print treatments; some students had prior experience with learning from iPads; high proportion of computer (94.7%) and tablet (47.4%) ownership

- Comprehension was measured across three levels: literal, inferential and evaluative

- Treatment was in the "natural school setting and not in an artificial laboratory treatment" (2015, p.4)

- Findings: NSD across reading speed and comprehension; students who had previously used iPads in education read significantly faster than they did in print; however, print results were slightly better on average

- More detailed findings suggest much better results for print across some question types and with some participant groups (not picked up by authors) however "comparisons of the test scores across the media revealed no significant differences in comprehension scores between the iPad and paper for any of the experimental groups" (2015, p.11)

- The authors note that "a fairly small sample was used for this study, which may decrease the generalisability of the results" (2015, p.11) and summarise that from their evidence "e-books do not compromise either reading speed or comprehension of students within their academic environment, but may in fact be effective tools for reading and learning" (2015, p.12)

- This study is interesting in that it focuses on the use of iPads under classroom, not laboratory, conditions. Interesting, too, to note that students already exposed to using iPads educationally read significantly faster without adverse effect.

Sidi, Y., Ophir, Y., & Ackerman, R. (2016). Generalizing screen inferiority - does the medium, screen versus paper, affect performance even with brief tasks? Metacognition and Learning, 11(1). https://doi.org/10.1007/s11409-015-9150-6

- The study considers how 264 undergraduate engineering students engage with short math problems presented on-screen compared with in print; "there were no performance differences between the media" (2016, p.15)

- The authors acknowledge findings of NSD and note that "the majority of studies that found screen inferiority have used reading comprehension tasks involving a substantial reading burden" (2016, p.16)

- While recognising the concern that working with computers and digital text may reduce cognitive processing, the authors counter that "recent studies have demonstrated elimination of screen inferiority by activities that encourage in-depth processing" (2016, p.17)

- The study aims to demonstrate "whether challenging tasks that require recruitment of mental effort, yet involve a minimal reading burden, also show screen inferiority in performance and/or metacognitive processes" (ibid); the study requires participants not so much to read, but to process information provided by the screen and in print

- Participants preferred print, yet on the self-paced task whereby participants were not permitted to draw or take notes, there was NSD across the media

- A second experiment combined the above with the introduction of a confidence rating, n=117; while there was higher confidence on screen, again there was NSD

- The authors note that "when the reading load is reduced, performance differences can be eliminated" (2016, p.27)

- Disfluent fonts improved the performance of on-screen participants beyond any other treatment (including fluent font in print); disfluent fonts cause on-screen readers to be more cautious and less confident, which seems to improve comprehension (disfluent fonts can be read, but require more effort)

- The authors found that "judgments were less sensitive to variability in performance (generated by the fluency manipulation) on screen than on paper" (2016, p.29) but they caution that "it would be rash to derive decisive conclusions for the effects of perceptual fluency on performance in the two media" (ibid)

- This paper helpfully extends the definition of 'reading' to include acting on instructions and thinking about responding to a problem. No significant difference! The finding about font type is also useful; making on-screen readers more self-conscious seems to improve their engagement with text.

Sidi, Y., Shpigelman, M., Zalmanov, H., & Ackerman, R. (2017). Understanding metacognitive inferiority on screen by exposing cues for depth of processing. Learning and Instruction, 51. https://doi.org/10.1016/j.learninstruc.2017.01.002

- Three experiments comparing on-screen and print comprehension by manipulating cognitive pressure. The first experiment (n=103 university students) manipulated time frames; the second (n=72) sought to reduce perceived task pressure; the third (n=113) was based on a problem-solving task

- Treatment involved text of less than 100 (one hundred) words

- The authors cite research indicating that "metacognitive processes are sensitive to contextual cues that hint at the expected depth of processing, regardless of the reading burden involved" (2017, p.61), which means that "employing simple task characteristics allow eliminating screen inferiority altogether" (2017, p.63)

- No significant difference was found when a limited time frame was applied; without time pressure, on-screen readers were over-confident. "Notably, screen inferiority was found only when the time limit was known in advance, but not when participants were interrupted unexpectedly after the same amount of study time" (2017, p.62)

- The authors suggest that "the lengthier the text, the more it is susceptible to the technological disadvantages associated with screen reading (e.g., eye strain)" (2017, p.63)

- Experiment one: time pressure made print reading more efficient and on-screen readers were the most overconfident; "although cognitive processing can be effective on screen, and sometimes even better than it is on paper, time pressure impedes cognitive processing on screen in particular" (2017, p.66); the authors also report "the superiority of working on screen under the ample time condition in terms of success and efficiency" (2017, p.69)

- Experiment two: perceived pressure was removed; "All participants solved the problems under a loose time frame, in the same manner as the parallel condition in Experiment 1, which did not generate screen inferiority" (2017, p.66)

- Experiment three: a problem-solving experiment which required participants to read three words and deduce the word common to them all; there was evidence found of "greater overconfidence on screen than on paper under time pressure even with stimuli that entailed reading only three isolated words, although no efficiency or success rate differences were found" (2017, p.69)

- Overall, the authors write that "the results support our hypothesis that working on screen is highly sensitive to task characteristics that signal legitimacy for shallow processing, and this affects both metacognitive and cognitive processes" (2017, p.70), possibly as a result of on-screen approaches used for reading email, social network posts, etc; this reinforces the importance of briefing on-screen readers about the task required of them, and explicitly suggesting they apply a particular form of engagement; the authors conclude that their research "demonstrated that using task-inherent cues which call for depth of processing, or avoiding those that legitimate shallow processing, may make the difference between perpetuating screen inferiority and overcoming it, or even achieving screen superiority" (2017, p.71)

- The article demonstrates that the reading approach used for on-screen reading can be positively manipulated by emphasising deep engagement. Over-confidence is clearly an ongoing factor in on-screen reading. This article demonstrates how it can be managed.

Terpend, R., Gattiker, T. F., & Lowe, S. E. (2014). Electronic textbooks: Antecedents of students’ adoption and learning outcomes. Decision Sciences Journal of Innovative Education, 12(2). https://doi.org/10.1111/dsji.12031

- The study considers students' acceptance of e-texts, and whether the choice of e-text influences grades

- Survey of approximately 20000 students across the US

- Perceived ease-of-use and price are leading influencers toward e-texts

- Hard-copy text users received higher grades, but not at a level of significance

- "Students are more likely to purchase an e-text if they are comfortable reading on a screen and if they perceive that both buying and using an e-text are easy" (2014, p.162)

- The authors found that the price at which all students would prefer a printed text over an electronic one is around 111.59% of the e-text price; they also found that "10 percent of individuals will still adopt the hardcopy text even if it is priced at 3.5 times the e-text" (2014, p.164)

- The article suggests that e-texts can be confidently adopted by professors, but cautions that not all students will appreciate it!

Van Horne, S., Russell, J.-E., & Schuh, K. L. (2016). The adoption of mark-up tools in an interactive e-textbook reader. Educational Technology Research and Development, 64(3). https://doi.org/10.1007/s11423-016-9425-x

- The article considers student use of e-text mark-up tools

- Highlighting is the only tool used by over half of participants (n=274 convenience sample across 8 courses); tools included highlight, note, annotation, bookmark and question the instructor

- Use of the tools decreased as the semester progressed, though effective use was linked to grade outcomes

- The article concludes that students would benefit from an orientation to using e-textbook study tools

- No surprises here.

Young, J. (2014). A study of print and computer–based reading to measure and compare rates of comprehension and retention. New Library World, 115(7–8). https://doi.org/10.1108/NLW-05-2014-0051

- The study compares comprehension and retention from reading on-screen and print (n=11 university students, with 'thick' descriptions); findings are "the participants demonstrated functional equivalency in both media, but they had a preference for print" (2014, p.376)

- Findings also suggest that on-screen articles should follow a similar structure and style to print ones

- Readings were substantial, including articles from The New Yorker, The Economist, and The Guardian; after each reading participants completed a questionnaire about the content they had just read

- It was found that "there was no measurable difference between the print and electronic reading processes" (2014, p.384)

- The fixed nature of the text (print vs editable web page) was an issue for some participants, determining how they engaged with the text; the internet is seen as more immediate and up to date, but preference is for print

- An interesting article, though more might have been said about the participants. NSD, but a very small sample.

Zhang, Y., & Kudva, S. (2014). E-books versus print books: Readers’ choices and preferences across contexts. Journal of the Association for Information Science and Technology, 65(8). https://doi.org/10.1002/asi.23076

- The paper overviews preferences of 2986 US respondents to a Reading Habits Survey

- E-book adoption is influenced by "the number of books read, the individual’s income, the occurrence and frequency of reading for research topics of interest, and the individual’s Internet use, followed by other variables such as race/ethnicity, reading for work/school, age, and education" (2014, p.1695)

- E-books are unlikely to displace print books, as the two forms have different affordances and audiences; many (19.6% of readers) read both

- Education and income levels determine e-book preference (in both cases, more means a greater preference); readers of e-books and both e-books and print read more frequently than print-only readers

- "the average number of books read by people who read both print and e-books is 25.75 books, as compared to 15.75 and 10.94 for those who only read print books and e-books, respectively" (2014, p.1702)

- "Ultimately, for those who read, content matters more than medium" (2014, p.1705)

- Another demographically interesting paper for e-book adoption and preference, confirming that higher levels of education are associated with on-screen reading (see also Mizrachi et al 2018 above). This points toward teaching effective on-screen reading as a useful and transferable academic skill.


Thanks for the comprehensive review. I wanted to add a detail on the question you raised for the Lenhard et al. paper. The students could correct mistakes on paper, but not in the CB condition. However, they did not do so. On paper, there are hardly any corrections at all and consequently, this difference does not explain the mode effects. Best regards, Wolfgang Lenhard

Thanks Wolfgang, that's a useful clarification. You may be interested in the updated version of my synthesis paper, http://www.jofdl.nz/index.php/JOFDL/article/view/347. I hope you're continuing with the research!
